Now that ChatGPT is all the rage as a standalone machine for getting answers and generating content, people and developers are asking the next logical question: how can I integrate ChatGPT into my website?

Integrating ChatGPT into a website is technically not that difficult. It is the set-up, dataset identification, dataset collection and training process that are a little difficult and time-consuming, and most of that work is done not by website developers but by the business and product owners.

The first step is to identify the use cases, that is, where ChatGPT can help the different visitors to your site. For example, some visitors will be people just looking to see what you do, some will be potential customers looking for product information, and some will be current customers looking for help.

UX Is For Chat?

This is the User Experience (UX) research part of the ChatGPT integration. You need to identify the different types of visitors to your website so you can figure out what information each of them might look for. That, in turn, will lead you to detail the questions they might ask and the type of data they will want.

ChatGPT can answer almost anything, but when you integrate it into a website, you only want it answering questions that are relevant to your website and your business. This means ChatGPT will need to be set up to use a specific, narrow dataset when it answers chats and generates content.

This UX work is important because it helps you generate and gather the dataset that ChatGPT will use to lead discussions and interactions with your website visitors. Once the visitor profiles are defined and a questions/data matrix is filled out, you will have a clear understanding of the kind of data that needs to be in the dataset ChatGPT will use for your website.

Data Hungry

The dataset that ChatGPT will use as the basis for all its content and discussions is the most important part of integrating ChatGPT into your website. The more detailed and complete the dataset is, the better and more useful your ChatGPT website integration will be.

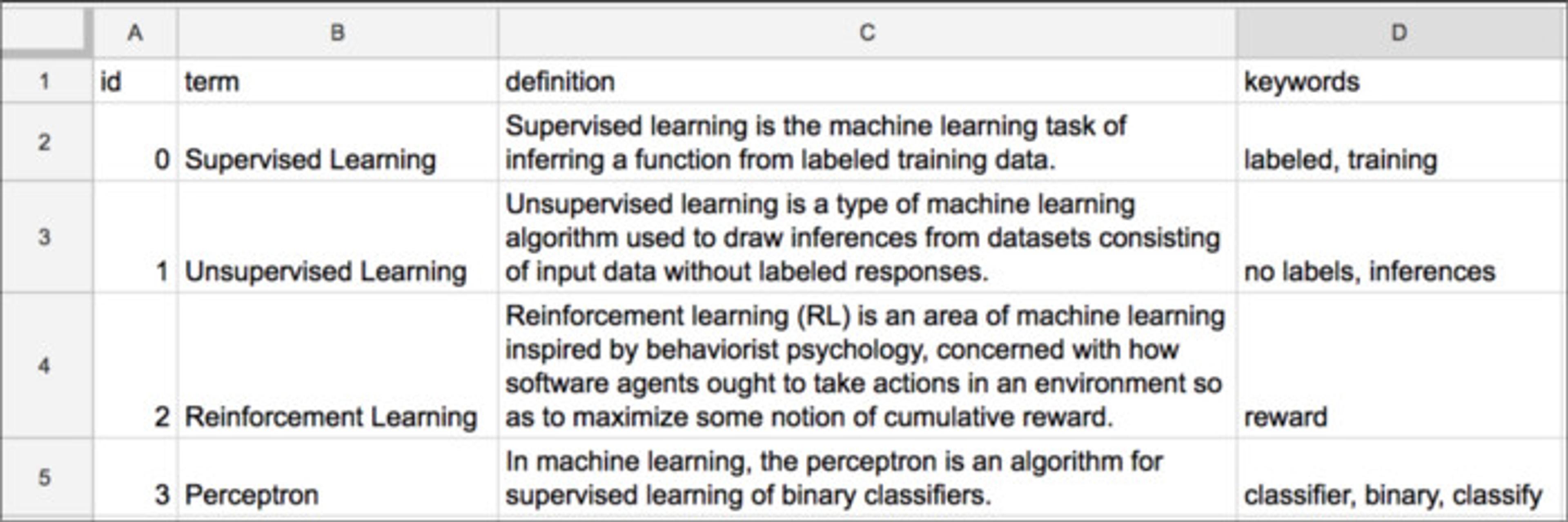

Your dataset may be as simple as an .xls spreadsheet of FAQs, but it could also be a spreadsheet of the basic processes that users follow on the website, such as how to register or how to contact the company. Another good dataset might be a logic tree of all the business options your site offers to visitors.

For many, spreadsheets might be the most convenient or easiest way to generate your ChatGPT dataset. The reason is that they are a convenient way to organize data in tabular form, with each row representing a data point and each column representing a feature or attribute of the data. When using a spreadsheet to store data for ChatGPT, you can use one row for each question and its corresponding answer, and you can use different columns to store information such as the question category, keywords, or any other relevant metadata. You can also use separate worksheets within the same spreadsheet to store different data or different versions of the same data.
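As a minimal sketch of that row-per-question layout, assuming the spreadsheet has been exported to CSV with hypothetical column names question, answer and category, Python’s built-in csv module can turn each row into a record:

```python
import csv
import io

# Hypothetical CSV export of an FAQ spreadsheet: one row per question/answer pair,
# plus a "category" column used as metadata.
FAQ_CSV = """question,answer,category
How do I register?,Click Sign Up on the home page and fill in the form.,account
How do I contact support?,Use the Contact page or email support@example.com.,support
"""

def load_faq_rows(csv_text):
    """Return one dict per spreadsheet row, skipping rows without a question or answer."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        question = (row.get("question") or "").strip()
        answer = (row.get("answer") or "").strip()
        if question and answer:
            rows.append({"question": question,
                         "answer": answer,
                         "category": (row.get("category") or "").strip()})
    return rows

faq = load_faq_rows(FAQ_CSV)
print(len(faq), faq[0]["category"])
```

For a real export, pass open("faq.csv", newline="", encoding="utf-8") to csv.DictReader instead of the in-memory string; the file name is a placeholder.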

1. Provide your data as pairs of user prompts and the responses you want the model to give. For example, you can pair a user question with the answer you would like ChatGPT to return. These paired inputs and outputs are what the model will be trained on.

2. For use cases, you can format each one as a question such as “Do you have a use case related to the auto industry?” in the user prompt column, and the case study text in the answer column.

Popular spreadsheet programs such as Excel and Google Sheets, which are widely used in business and academic settings, offer features like sorting, filtering, and formatting that make it easy to work with and analyze data. They can also export to formats such as CSV, a common format for text data used in natural language processing (NLP) tasks like ChatGPT training.

Overall, using a spreadsheet like Excel to store data for ChatGPT can be a good choice, especially if you are already familiar with the program and its features. However, other formats like JSON, CSV, or SQL databases can also be used, depending on your specific needs and preferences.

Remember, use the questions/data matrix that you generated in the UX Visitor Profiles work to help you figure out the type of data your visitors are looking for. Use spreadsheets or any other database to generate and collect that data.

For example, if your website generates value estimates for art collections, and visitors must go through a multi-step process on the site to get one, then an important part of the dataset would be a spreadsheet of each step of that process, with any advice or notes for each step.

Let’s Clean

Once you have all the data files fleshed out and in one place, the next step is to prepare the data. Cleaning, formatting, and splitting the data into training and test sets are the key preprocessing steps for preparing data to train ChatGPT:

Cleaning:

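Cleaning involves removing unwanted characters, symbols, or noise (such as leftover HTML tags or stray whitespace) from the text data, so the text is in a consistent format that ChatGPT can easily parse and understand. Cleaning may also involve correcting spelling errors and removing duplicate or empty entries. Classic NLP steps such as removing stop words or lowercasing everything are usually unnecessary for ChatGPT-style models, which learn from natural, properly cased text.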
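A minimal cleaning pass might look like the following sketch, assuming each dataset row has already been loaded as a (question, answer) pair of strings. It strips HTML remnants, normalizes Unicode and whitespace, and drops empty or duplicate questions, while deliberately keeping the original casing and wording.

```python
import html
import re
import unicodedata

def clean_text(text):
    """Normalize one spreadsheet cell before it goes into the dataset."""
    text = html.unescape(text)                  # "&amp;" -> "&", "&nbsp;" -> non-breaking space
    text = re.sub(r"<[^>]+>", " ", text)        # replace leftover HTML tags with a space
    text = unicodedata.normalize("NFKC", text)  # unify look-alike characters (e.g. non-breaking spaces)
    text = re.sub(r"\s+", " ", text)            # collapse runs of whitespace and newlines
    return text.strip()

def clean_pairs(pairs):
    """Clean each (question, answer) pair, dropping empty entries and duplicate questions."""
    seen = set()
    cleaned = []
    for question, answer in pairs:
        q, a = clean_text(question), clean_text(answer)
        key = q.lower()  # lowercase only for duplicate detection, not in the stored text
        if q and a and key not in seen:
            seen.add(key)
            cleaned.append((q, a))
    return cleaned

raw = [("<p>How do I register?</p>", "Click&nbsp;Sign Up.\n\n"),
       ("how do I register?", "Duplicate row"),
       ("", "Orphan answer")]
print(clean_pairs(raw))
```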

Formatting:

Formatting involves converting the cleaned text data into a format that ChatGPT can use. This may involve tokenizing the text into individual words or sub-words, adding special tokens to mark the start or end of a sentence, or formatting the data as JSON or CSV. (Spreadsheets help here, since the data is already organized into rows and columns that export easily to CSV.)
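As one concrete target format, OpenAI’s fine-tuning guide for chat models describes a JSON Lines file where each line holds a messages list with system, user and assistant entries. The sketch below assumes that layout (check the current OpenAI documentation before uploading), and the system prompt text is a hypothetical placeholder:

```python
import io
import json

# Hypothetical system prompt that keeps the assistant on topic for your site.
SYSTEM_PROMPT = "You are the website assistant for Example Co. Only answer questions about Example Co."

def to_chat_example(question, answer, system_prompt=SYSTEM_PROMPT):
    """Turn one question/answer pair into a chat-format training example."""
    return {"messages": [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}

def write_jsonl(pairs, fileobj):
    """Write one JSON object per line (JSON Lines)."""
    for question, answer in pairs:
        example = to_chat_example(question, answer)
        fileobj.write(json.dumps(example, ensure_ascii=False) + "\n")

buffer = io.StringIO()  # use open("train.jsonl", "w", encoding="utf-8") for a real file
write_jsonl([("How do I register?", "Click Sign Up on the home page.")], buffer)
print(buffer.getvalue(), end="")
```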

Splitting:

This involves dividing the formatted data into two data sets: the training set and the test set. The training set is used to train ChatGPT, while the test set is used to evaluate the model’s performance. The split is typically done randomly, ensuring that both sets have a representative sample of the data (Again, spreadsheets make this easier).
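A random split can be sketched with the standard library alone. The 80/20 ratio and the fixed seed below are common choices, not requirements; the seed simply makes the split reproducible between runs:

```python
import random

def train_test_split(examples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the examples and split off a held-out test set."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> the same split on every run
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

pairs = [(f"Question {i}", f"Answer {i}") for i in range(10)]
train, test = train_test_split(pairs)
print(len(train), len(test))
```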

Get Set Up

Once the dataset is ready, it is time to start the integration process. Some of this work can be done in parallel with the dataset prep, but integration usually takes a lot less time than generating, organizing and preparing the data, so there isn’t much overlap.

The integration process begins with signing up for OpenAI and getting an API key, which breaks down as follows:

- If you don’t already have an OpenAI account (the same account used for ChatGPT), you will need to sign up for one.

- Once you have an account, log in and click the option to create an API key. Please note that, at the time of writing, new users received a free credit of about $18 when they created an OpenAI account. After the free trial expires, you pay $0.002 per 1,000 tokens, where 1,000 tokens is about 750 words. Once the free credit has run out, you will need to add payment information to your OpenAI account and choose a usage level.

- Once your API key is generated, copy it and store it somewhere secure; you (or your developers) will paste it wherever the integration asks for the OpenAI API key.

- Once you have the API Key, you or the developers are ready to start the integration process.
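To get a feel for the per-token pricing quoted above, here is a rough cost estimate using the same rule of thumb (1,000 tokens is about 750 words) and the $0.002 price. The traffic figures are hypothetical, and prices change, so check OpenAI’s pricing page for current rates. Both the visitor’s question and the model’s answer (plus any instructions sent with each request) count toward the token total.

```python
def estimate_cost_usd(word_count, price_per_1k_tokens=0.002, words_per_1k_tokens=750):
    """Rough cost estimate from a word count, using the 1,000 tokens ~ 750 words rule of thumb."""
    tokens = word_count / words_per_1k_tokens * 1000
    return tokens / 1000 * price_per_1k_tokens

# Hypothetical traffic: 500 chats per day, ~300 words each (question + answer), for 30 days.
monthly_words = 500 * 300 * 30
print(f"{monthly_words:,} words ~ ${estimate_cost_usd(monthly_words):.2f}")
```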

It’s A Choice

To start the integration development, you need to choose an integration method, that is, decide how ChatGPT will connect to the website. Several methods are available, including a chat widget, API integration, or a custom integration. Your website’s technology stack is going to be the key factor in choosing the best integration method and third-party tools.

Next, you have to develop the chat interface that users will use to interact with ChatGPT. Developers can use third-party chatbot platforms like Dialogflow, Microsoft Bot Framework, or Amazon Lex.

Another option is to develop a custom interface from scratch by placing the ChatGPT model (accessed through the OpenAI API) behind your own API wrapper. An API wrapper is a package that bundles the underlying API calls (for OpenAI, JSON requests over HTTP) into a cohesive unit that is easy to call. This may involve wrapping the model in a web API framework and setting up an API endpoint that generates text:

1. Install the OpenAI client library using pip, the Python package manager (pip install openai), or the equivalent package manager for your development environment.

2. You should set up environment variables to store your API key securely. This is an optional step but is recommended to avoid hard-coding sensitive information in your code.
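As a minimal sketch of such a wrapper, the code below uses only Python’s standard library rather than the official openai client, and reads the key from an environment variable. The endpoint and the choices[0].message.content response field follow OpenAI’s Chat Completions API as documented at the time of writing; the model name, the system prompt and the OPENAI_API_KEY variable name (the one the official client also looks for) are assumptions you should adjust.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(question, api_key, model="gpt-3.5-turbo",
                       system_prompt="Answer only questions about our website."):
    """Build (but do not send) the HTTP request for one chat turn."""
    body = {
        "model": model,  # assumed model name; use whichever model your account has access to
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def ask(question):
    """Send one question to the API and return the assistant's reply text."""
    api_key = os.environ["OPENAI_API_KEY"]  # read from the environment, never hard-coded
    request = build_chat_request(question, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Keep a function like ask() on your server, behind an endpoint that your chat widget calls, so the API key is never sent to visitors’ browsers.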

Training Wheels

Before integration testing can begin, it is crucial to train ChatGPT on relevant data so that it learns your use cases and provides helpful responses. You can use OpenAI’s GPT-3/4 models or a similar language model for this purpose.

Training ChatGPT involves feeding it a large amount of relevant data (see the previous section, Data Hungry). To train on OpenAI’s GPT-3/4 models, you first need to sign up for the OpenAI API and pay for access to the model.

One approach is to use third-party tools to simplify the training process. The exact implementation details vary with the training framework and language model you use. Popular libraries such as Hugging Face’s Transformers and TensorFlow have built-in functions and APIs for data loading and training, so refer to the documentation and examples for your chosen framework for detailed instructions on loading the dataset into the model. Here are four popular tools and frameworks:

Hugging Face’s Transformers:

Transformers is an open-source library by Hugging Face that provides a high-level API for training and fine-tuning transformer-based models, including GPT-2 and other openly available models (GPT-3 itself is only available through OpenAI’s API). It supports popular deep learning frameworks like PyTorch and TensorFlow.

OpenAI GPT-3 Playground:

OpenAI offers its own GPT-3 Playground, a user-friendly web interface for interacting with models based on GPT-3. It lets you experiment with different prompts and parameters and observe the model’s responses in real time, which is useful for testing prompts before and after training.

TensorFlow:

TensorFlow is a widely used deep learning framework that offers powerful tools and libraries for training and deploying machine learning models. It provides TensorFlow Text, a library with text-specific preprocessing and tokenization capabilities that are useful when training ChatGPT-style language models.

PyTorch:

PyTorch is another popular deep learning framework known for its flexibility and ease of use. It provides tools and modules that can be leveraged for training language models like ChatGPT. Libraries like Transformers by Hugging Face offer PyTorch integration and make it convenient to train and fine-tune transformer models.

Loading your dataset into the ChatGPT model depends on the specific training framework or library you are using. Here’s a general overview of how you can load the dataset into the model:

1. Define Data Loading Mechanism:

Most training frameworks provide mechanisms for loading and processing data. You’ll typically need to define a data loading mechanism that reads the dataset and prepares it for training. This may involve writing custom code or using built-in functions and classes provided by the framework.

2. Batch Processing:

To train the model efficiently, data is usually processed in batches. The data loading mechanism should handle this by loading a fixed number of examples (input-output pairs) from the dataset at a time and feeding them into the model.

3. Tokenization:

Convert the textual input and output pairs into a tokenized format suitable for the model. Tokenization breaks the text into smaller units, such as words or sub-words, and assigns a unique numerical ID (token) to each unit, so the model can process the text effectively.

4. Feeding the Data to the Model:

Once the dataset is prepared and tokenized, it can be fed into the model during the training loop. This involves passing the input tokens to the model and comparing the output with the corresponding target tokens to calculate the loss and update the model’s parameters.
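The four steps above can be illustrated with a deliberately simplified, pure-Python sketch: a whitespace tokenizer (step 3) and a loader that yields padded batches of input/target token IDs (steps 1 and 2) ready for a training loop (step 4). Real models use sub-word tokenizers, such as one loaded with Hugging Face’s AutoTokenizer.from_pretrained, and framework-specific data loaders; every name here is illustrative only.

```python
from itertools import islice

def build_vocab(texts):
    """Step 3 helper: give every whitespace-separated word an integer ID."""
    vocab = {"<pad>": 0, "<unk>": 1}  # reserved IDs for padding and unknown words
    for text in texts:
        for word in text.split():
            vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Step 3: tokenize text into a list of token IDs (unknown words map to <unk>)."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.split()]

def batches(pairs, vocab, batch_size=2):
    """Steps 1-2: yield padded (input_ids, target_ids) batches for a training loop."""
    pairs = iter(pairs)
    while True:
        chunk = list(islice(pairs, batch_size))
        if not chunk:
            return
        inputs = [encode(question, vocab) for question, _ in chunk]
        targets = [encode(answer, vocab) for _, answer in chunk]
        width = max(len(seq) for seq in inputs + targets)

        def pad(seq):
            return seq + [vocab["<pad>"]] * (width - len(seq))

        yield [pad(s) for s in inputs], [pad(s) for s in targets]

pairs = [("how do I register", "click sign up"),
         ("where is pricing", "see the pricing page"),
         ("contact support", "use the contact page")]
vocab = build_vocab(text for pair in pairs for text in pair)
for input_ids, target_ids in batches(pairs, vocab):
    print(input_ids, target_ids)
```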

Existing datasets such as the Cornell Movie-Dialogs Corpus or Twitter datasets can provide a good starting point for training ChatGPT, or can augment your custom dataset.

It’s Getting Better

Once the initial training has been completed, you will need to go through one or more iterations of a test and re-training loop:

1. Evaluate Model:

After training the model, evaluate it by testing it on sample data and assessing its performance in generating relevant and helpful responses.

2. Refine and Improve:

Based on the evaluation, refine the model by adding more data, adjusting data preprocessing, tweaking hyperparameters, or changing other training settings.
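A crude automated check can help prioritize what to review in each loop. The sketch below assumes you have a held-out test set of (question, expected answer) pairs and a hypothetical answer_fn that sends a question to your chatbot and returns its reply; it flags answers that share too few words with the expected one. Word overlap is only a rough proxy, so flagged answers (and a sample of the passing ones) still need human review.

```python
import re

def word_set(text):
    """Lowercased set of words, used only for scoring."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def overlap_score(expected, actual):
    """Share of the expected answer's words that also appear in the actual answer (0.0 to 1.0)."""
    expected_words = word_set(expected)
    if not expected_words:
        return 0.0
    return len(expected_words & word_set(actual)) / len(expected_words)

def evaluate(test_pairs, answer_fn, threshold=0.5):
    """Ask every test question and return (question, score) for answers below the threshold."""
    weak = []
    for question, expected in test_pairs:
        score = overlap_score(expected, answer_fn(question))
        if score < threshold:
            weak.append((question, round(score, 2)))
    return weak

# Stand-in for a real chatbot call, so the example runs offline.
canned = {"How do I register?": "Just click Sign Up on our home page.",
          "Do you ship abroad?": "Please contact support."}
test_pairs = [("How do I register?", "Click Sign Up on the home page."),
              ("Do you ship abroad?", "Yes, we ship to most countries.")]
print(evaluate(test_pairs, canned.get))
```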

Once ChatGPT has been trained on your data, you have gone through at least one test/feedback loop, and the web chatbot integration is complete on your website, you are ready to go live.

Once live, test the chatbot with basic conversations to make sure its responses work as intended on the website.

As your business changes, for example as you add new people, products or services, you will need to add new training data to your ChatGPT model. Besides business changes, you will need to regularly review and improve the chatbot’s performance based on user feedback, new use cases, and website technology changes and updates.

Conclusion

Adding ChatGPT to your website involves building a complete and correctly formatted dataset, choosing the chatbot integration for your website, getting an OpenAI API key, training the model on your dataset, testing and refining the model, and then launching it on your website.

As you can tell from the length of this article, it can be a complex and lengthy process, with the dataset generation, formatting and cleaning being the most challenging part for most people and businesses.

Even with this challenging work, ChatGPT, or any chatbot for that matter, can really enhance a business’s web presence and help it grow.

For more info, check out our Podcast on ChatGPT.


ScreamingBox provides quick turn-around and turnkey digital product development by leveraging the power of remote developers, designers, and strategists. We are able to deliver the scalability and flexibility of a digital agency while maintaining the competitive cost, friendliness and accountability of a freelancer. Efficient Pricing, High Quality and Senior Level Experience is the ScreamingBox result. Let's discuss how we can help with your development needs.